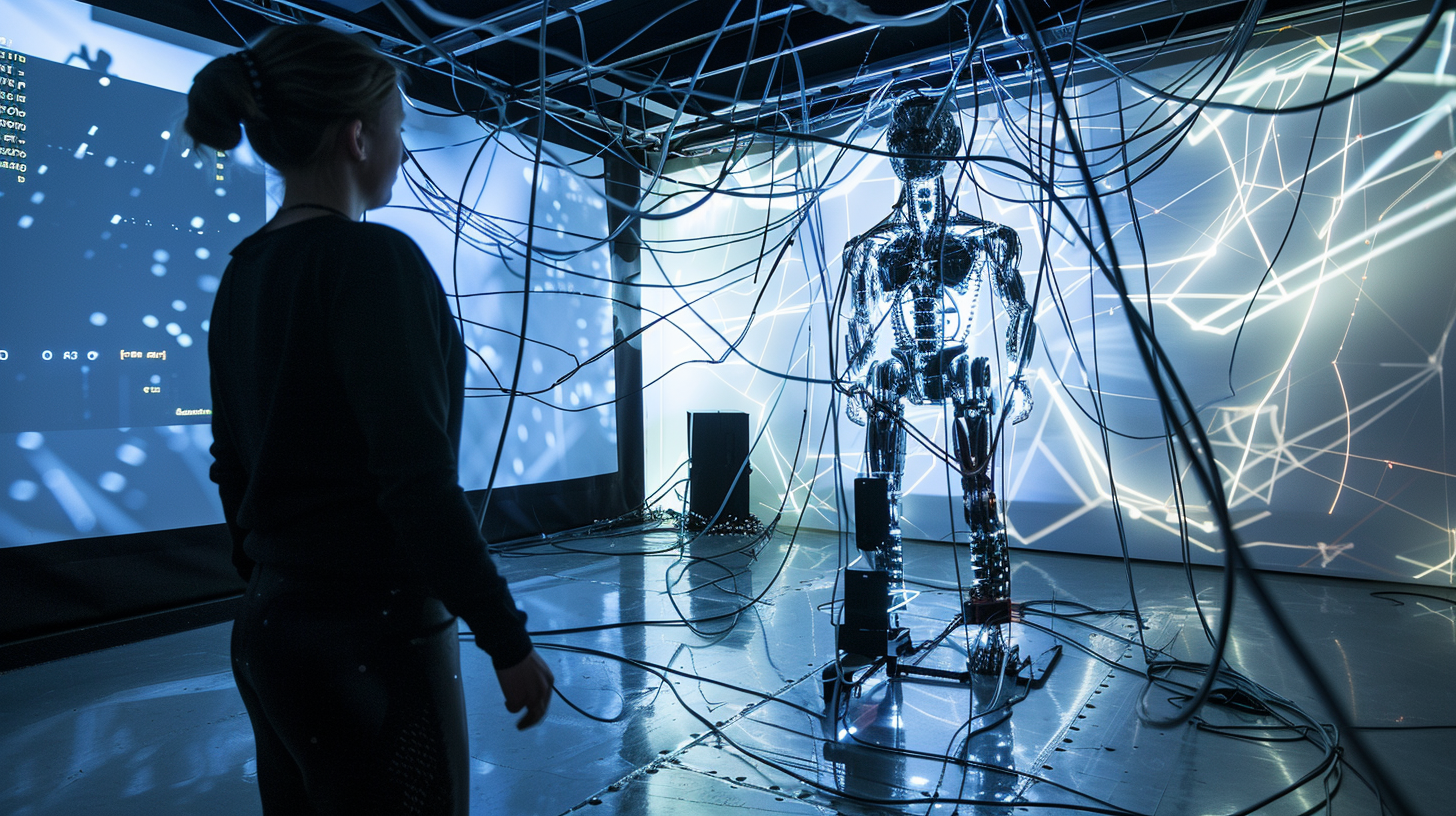

The integration of advanced AI into defense robotics raises intricate ethical questions about autonomous warfare and military operations. As robots gain cognitive abilities and autonomy, they challenge traditional concepts of accountability, morality, and decision-making. Balancing innovation against ethical responsibility is therefore vital if AI and robotics are to coexist harmoniously in defense contexts. As the line between artificial and biological intelligence blurs, navigating this ethical landscape becomes paramount to deploying AI technologies ethically. Examining how AI is being integrated into defense reveals a complex web of ethical dilemmas and societal impacts that demand careful consideration.

Key Takeaways

- Define boundaries of synthetic consciousness in defense robotics.

- Uphold ethical accountability in autonomous warfare systems.

- Address societal challenges and national security implications.

- Establish clear AI policy frameworks for responsible defense use.

- Engage in public discourse to navigate ethical dilemmas in AI integration.

Ethical Implications of AI Integration

The integration of advanced artificial intelligence (AI) into robotics presents a range of ethical implications that demand critical examination. As researchers pursue robots that might one day possess something like synthetic consciousness, robot ethics faces unprecedented challenges. The blurring line between artificial and biological intelligence raises the question of where the boundaries of life and consciousness lie. Ethical debates intensify as society grapples with what it means to grant robots cognitive abilities and autonomy. Questions of accountability, of morality in machine decision-making, and of the risks posed by autonomous weapons further complicate the picture. As the technology advances, balancing innovation against ethical responsibility becomes paramount to shaping a future where AI and robotics coexist harmoniously.

Defense Robotics and Autonomous Warfare

Robotics and autonomous systems are advancing rapidly into defense, reshaping military operations with unprecedented efficiency and complexity. Military autonomy allows machines to make critical decisions independently, which shifts the strategic landscape. Ethical accountability is therefore essential: autonomous systems must adhere to ethical standards and international law. Delegating lethal capabilities to machines raises hard questions about the morality and consequences of robot-driven warfare. Striking a balance between technological progress and ethical considerations is crucial to prevent unintended harm, maintain human oversight, and uphold values of freedom and justice in defense operations. As autonomous warfare evolves, stringent ethical guidelines and frameworks become ever more necessary to navigate this emerging frontier responsibly.

Societal Challenges and National Security

Beyond battlefield applications, the integration of advanced robotics and AI poses intricate societal challenges that are intertwined with national security. Job displacement and security anxiety come to the forefront, calling for public engagement and robust AI policy. The societal impact of AI in defense includes worries about reliance on AI for national security, potential geopolitical imbalances, and ethical dilemmas in combat. Balancing defense capabilities with ethical considerations requires multidisciplinary collaboration and proactive measures. As the technology advances, public involvement in AI policy decisions becomes essential to managing these societal and national security implications.

Policy Responses to AI in Defense

Strategically navigating the intersection of artificial intelligence (AI) and defense requires a systematic approach to policy formulation that addresses the multifaceted challenges posed by advanced robotics. In shaping effective policies, the following considerations are central:

- Legislative Frameworks: Implementing clear and comprehensive laws to govern the use and development of AI in defense.

- Ethical Guidelines: Establishing ethical standards to ensure the responsible and humane application of AI technologies in military settings.

- International Cooperation: Promoting collaboration between nations to create harmonized regulations that foster ethical AI use globally.

- Transparency and Accountability: Enforcing mechanisms that make AI decision-making processes transparent and hold individuals and organizations accountable for the outcomes of the systems they build and deploy.

Public Perception and Ethical Dilemmas

Public perceptions of advanced robotics in defense bring a broad range of ethical dilemmas into focus, and these demand critical examination as technological capabilities evolve. Public trust in AI systems, particularly in defense applications, hinges on addressing the moral questions surrounding autonomy, accountability, and transparency. As society navigates the integration of advanced AI into warfare, concerns about civilian safety, privacy, and autonomous weapons loom large. Harnessing the benefits of AI for defense while upholding ethical standards requires a collaborative effort from policymakers, technologists, and ethicists. Frameworks that prioritize both national security and human values are essential to shaping public perception and fostering trust in the ethical deployment of advanced robotics in defense.

Interdisciplinary Collaboration in AI Ethics

Interdisciplinary collaboration plays a pivotal role in shaping ethical frameworks for the integration of artificial intelligence in defense technologies.

- Holistic Perspectives: Drawing insights from diverse fields like ethics, technology governance, psychology, and law enhances the multifaceted examination of AI ethics.

- Inclusive Decision-Making: Engaging experts from various disciplines ensures a comprehensive approach to addressing complex ethical dilemmas in AI development and deployment.

- Ethical Guidelines Development: Collaborating across disciplines aids in creating robust ethical guidelines that govern the responsible use of AI in defense contexts.

- Policy Implementation: Effective interdisciplinary collaboration fosters the translation of ethical principles into practical policies, enhancing the governance of AI technologies in defense applications.

Future Trends and Debates on Defense AI

With AI technologies advancing rapidly and being integrated ever more deeply into defense applications, debate over the future of defense AI continues to intensify. Two issues dominate: AI regulation and military autonomy. Clear regulations on the use of AI in defense are increasingly seen as crucial to ensuring ethical deployment and accountability. At the same time, the debate over military autonomy, that is, how much decision-making power should be delegated to AI systems in warfare, is gaining prominence. Resolving these debates will require balancing technological advancement against ethical considerations, so that AI is integrated into defense operations responsibly and beneficially while its risks are kept in check.

Frequently Asked Questions

How Can We Ensure Accountability for Actions Taken by Autonomous AI Robots in Defense Operations?

Ensuring accountability for actions taken by autonomous AI robots in defense operations requires ethical programming and algorithmic transparency from the outset. Guidelines and regulations that mandate clearly defined decision-making processes and accountability mechanisms can strengthen oversight and mitigate the risks of autonomous action. Transparent algorithms and decision-making systems, in turn, foster trust and make it possible to evaluate the actions that autonomous robots take in defense scenarios.

What Measures Are in Place to Prevent Potential AI Biases From Influencing Military Decisions?

Guarding against AI biases in military decisions calls for algorithmic transparency, bias mitigation strategies, and oversight mechanisms. Ethical evaluation of data sources, continuous monitoring for bias, and input from diverse perspectives help keep decision-making fair. Public awareness, thorough testing, and accountability frameworks support ethical military decision-making and foster trust in AI systems. Balancing technical capabilities with ethical considerations safeguards against harmful biases and promotes the responsible and effective use of AI in the military.

Are There Discussions on Creating International Agreements to Regulate the Use of AI in Defense?

Yes. Discussions on international agreements to regulate AI in defense are ongoing, for example within the Group of Governmental Experts on lethal autonomous weapons systems convened under the UN Convention on Certain Conventional Weapons. These discussions focus on mitigating unintended consequences through diplomatic means, and nations are considering frameworks that address ethical concerns and ensure responsible AI use. Collaboration among countries is essential to developing rules for AI in warfare that promote transparency and accountability. Efforts to balance technological advancement with ethical considerations underscore the importance of international cooperation in shaping AI regulations for defense.

How Do We Address the Ethical Implications of Using AI in Combat Scenarios, Especially in Urban Warfare?

Urban warfare sharpens the ethical challenges of AI use because civilians are far more likely to be present. Balancing combat effectiveness against minimizing collateral damage becomes crucial, and AI systems must reliably distinguish between combatants and non-combatants. Strict rules of engagement and oversight mechanisms can mitigate these risks. Ethical training for AI developers and operators is also essential to navigating urban warfare scenarios responsibly.

What Steps Are Being Taken to Protect Civilian Populations From the Risks Associated With Advanced AI Weaponry?

Protecting civilian populations from the risks of advanced AI weaponry starts with robust ethical frameworks that ensure accountability and prevent bias. Stringent regulations, clear rules of engagement, and oversight mechanisms can curb unintended harm. Transparency, ethical AI development, and engagement with diverse stakeholders also play pivotal roles. Collaboration with AI experts, ethicists, and affected communities can help uphold the principles of international humanitarian law, prioritize civilian well-being, and prevent ethical breaches.